There's a good chance you and your colleagues have had a tortured conversation about customer service surveys.

What type of survey is best?

How many questions should it include?

Are the scores all fair?

That last one is a doozy. Executives worry whether a customer upset about a defective product will “unfairly” give the customer service team a low score on its post-transaction survey. As if the survey is somehow about assigning credit rather than getting unvarnished, actionable feedback from customers.

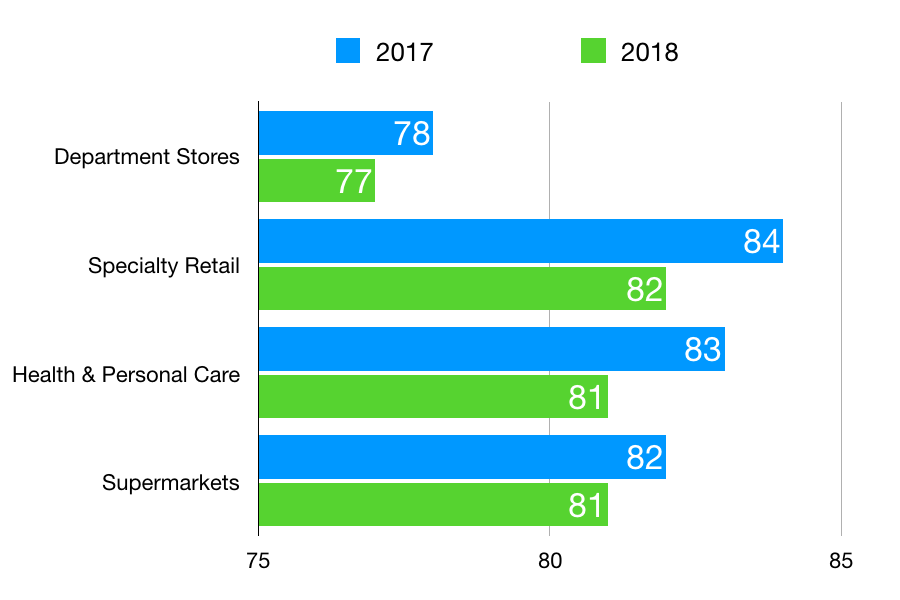

Often lost in these discussions is the one and only true purpose of surveying customers. Customer service surveys are good at measuring just one thing: sentiment.

Here's an overview of what your survey should not try to measure, and why sentiment is all you really need anyway.

What customer service surveys should not measure

Would you use a hammer to change a light bulb? Probably not. The result would be disastrous. A hammer is a good tool, but it's the wrong tool for changing a light bulb.

Surveys are often misused in the same way. Here are a few common survey errors.

Error #1: Fact-finding. Surveys shouldn't ask customers for facts, such as how long a customer had to wait to be served. The simple explanation is customers aren't good at remembering facts, so this can quickly skew your results.

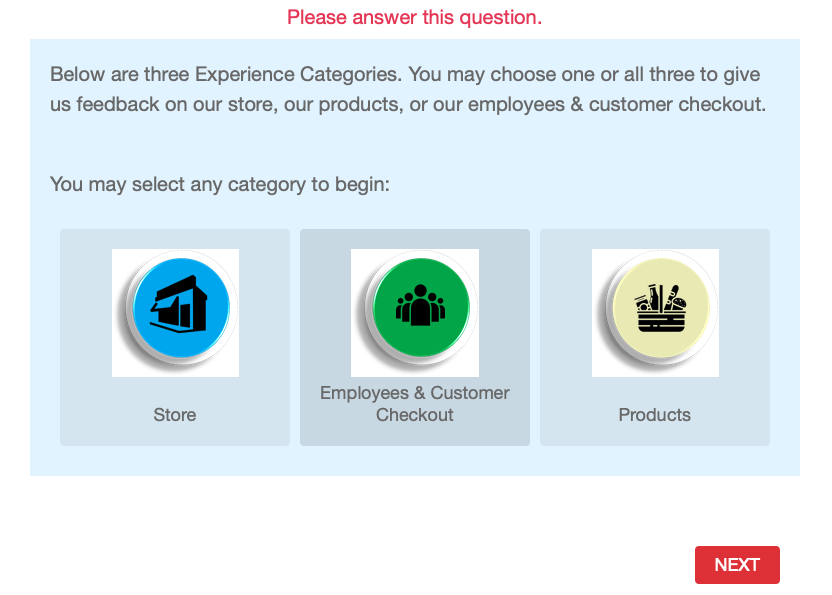

Error #2: Isolation. Some surveys try to isolate customer service issues from other problems, such as defective products, late shipments, or service failures. It's a very company-centric approach that attempts to assign credit (or blame) to various departments without recognizing that all of these functions play a role in the customer experience.

Error #3: Granularity. Many surveys are loaded with 30 or more questions in an attempt to dig deep into the customer's journey. The problem here is twofold. One, the survey itself becomes a bad experience for the customer. Two, those 30 exhausting questions don't necessarily get at what the customer truly cares about. That’s what the open comments field is for.

Try as you might, surveys just don’t measure these things well. There are lots of other tools and techniques for gathering the essential customer experience insights that surveys don’t capture. For example, what customers tell your employees directly is a potential goldmine.

What surveys do measure is how a customer feels. Just like a hammer is great at pounding nails, surveys are a good tool for gauging sentiment.

Why sentiment is an essential insight

Sentiment is a measure of how customers feel about your product, service, or company. Those emotions influence how customers interact with brands in a number of ways, from purchase decisions to word-of-mouth advertising.

A good customer service survey identifies customer sentiment and then helps uncover what's driving those feelings.

Why do customers love us?

Why do customers loathe us?

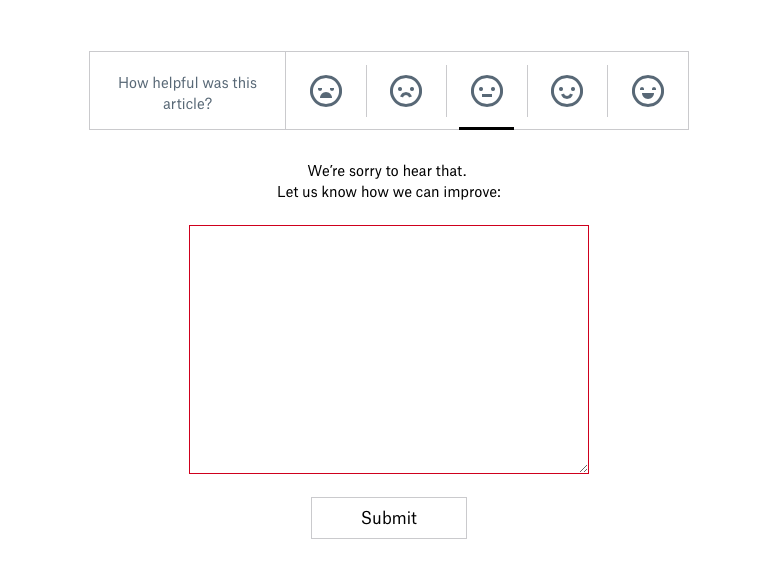

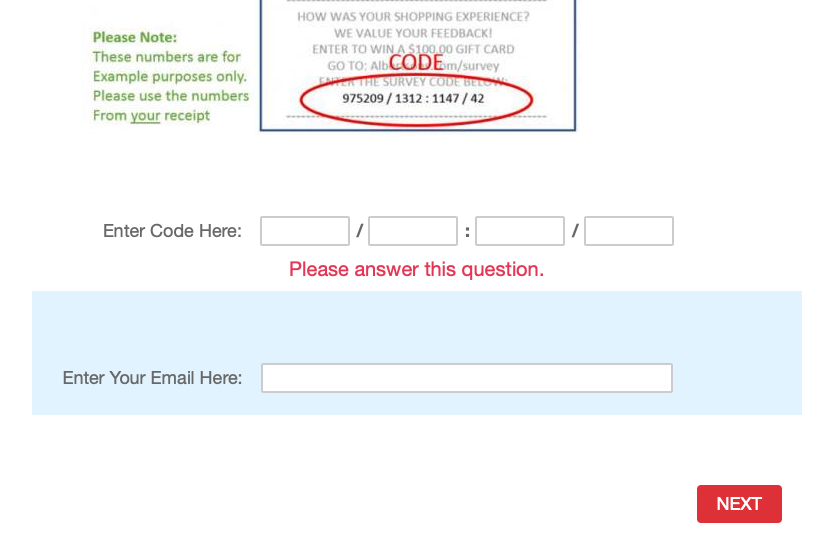

In most cases, you don't need more than two simple questions to uncover how your customer feels.

A rating question.

An open-comment question so customers can explain their ratings.

That's the simple design of most online review platforms. You give a star rating and then write about why you gave that rating. It's up to you as the customer to explain why you feel the way you feel in your own words.

This design also works no matter what type of survey you use. (Here’s a video guide to survey types.)

Compare the two-question design to the typical, bloated survey that constrains customers with 30 nonsense questions about wait times, employee greetings, and whether they would hire the person who served them that day. The two-question survey is easier for customers to complete and easier for you to analyze.

What you can do with customer sentiment

Capturing customer sentiment allows you to do two big things. First, it helps you find the pebble in your customers' shoes so you can remove it. Second, it helps you discover what makes your customers truly fall in love with your brand, product, or service, so you can do that more consistently.

One of my clients used their two-question survey to identify a process that truly annoyed their customers. It was the one thing consistently mentioned in negative surveys. So the client investigated the issue and improved the process.

Complaints quickly decreased. Even better, the new process was far more efficient, saving my client valuable time.

The same survey revealed that the happiest customers knew an employee by name. They had made a personal connection with someone, and felt that employee was their advocate. My client leveraged that strength and encouraged all employees to spend just a little extra time connecting with customers on a personal level.

Customer satisfaction rose again. That extra time connecting with customers also dramatically reduced time-consuming complaints and escalations.

Analyzing your surveys doesn’t require advanced math, sophisticated software, or hours of time. Here’s a guide to quickly analyzing your survey results.
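If your responses live in a spreadsheet export, the kind of tally described in the client story (what keeps coming up in unhappy surveys, and what keeps coming up in happy ones) can be sketched in a few lines of Python. This is only an illustration, not a required tool: the sample responses, the 1-to-5 rating scale, the theme names, and the keyword lists are all made-up assumptions you would replace with your own data and your own reading of the comments.

```python
from collections import Counter

# Hypothetical responses: (rating on an assumed 1-5 scale, open comment).
responses = [
    (1, "The return process took forever and nobody called me back."),
    (2, "Return process was confusing."),
    (5, "Maria knew my account and fixed it right away."),
    (4, "Quick answer, friendly rep."),
    (1, "Had to explain the return process three times."),
]

# Hypothetical themes and the phrases that signal them.
# Build your own list after reading a sample of real comments.
THEMES = {
    "returns": ["return process", "refund"],
    "personal connection": ["maria", "by name", "knew my"],
    "speed": ["quick", "fast", "forever"],
}

def tally_themes(responses, low_max=2, high_min=4):
    """Count how many low-rated and high-rated comments mention each theme."""
    low, high = Counter(), Counter()
    for rating, comment in responses:
        text = comment.lower()
        if rating <= low_max:
            bucket = low
        elif rating >= high_min:
            bucket = high
        else:
            continue  # skip middle ratings in this simple sketch
        for theme, phrases in THEMES.items():
            # Count each theme at most once per comment.
            if any(phrase in text for phrase in phrases):
                bucket[theme] += 1
    return low, high

low, high = tally_themes(responses)
print("Mentioned in low ratings:", low.most_common())
print("Mentioned in high ratings:", high.most_common())
```

Simple keyword matching like this will miss comments that describe the same problem in different words, so treat the counts as a pointer to which comments to read closely rather than as a final answer.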

Conclusion

Customer service surveys get much shorter and far more useful when we remove all the nonsense. Focus on learning how your customers feel and why they feel that way, and you'll have incredibly useful information.

I've put together a resource page to help you learn more.

LinkedIn Learning subscribers can also access my course, Using Surveys to Improve Customer Service. A 30-day trial is available if you're not yet a subscriber.